Introduction

There is a lot of hype around A.I., which is being presented as the next candidate for a ground-breaking technological disruption.

While this disruption is already happening in some specific areas, such as the smart-home device ecosystem, others, like biometric authentication, still need technological refinement to make the authentication process as secure as possible.

In the current technological landscape, A.I. is playing a major role in trying to provide user-friendly and more secure biometric authentication schemes, including but not limited to face and voice authentication.

Although many financial institutions, such as HSBC, have started using voice to authenticate their users (HSBC, n.d.), this method of identifying users still cannot be considered as secure as more robust biometric authentication solutions such as fingerprint or iris recognition. This is because, even though some researchers consider a person’s voice to be unique (Trilok, 2004), current state-of-the-art voice authentication systems can still be fooled with little effort (Simmons, 2017).

The research

Over the last few years, a lot of attention has been drawn to new ways of bypassing current state-of-the-art voice authentication mechanisms.

For example, Baidu has announced a new AI system that can mimic a subject’s voice after training itself on less than a minute of audio snippets.

These security threats can be categorised as ‘voice impersonation’ attacks, in which attackers try to steal or impersonate another person’s voice either by using previously recorded samples of the original voice or with the help of machine learning techniques such as deep learning.

In general, here is how a voice impersonation attack works:

- Collect samples of the victim’s voice, in person or online

- Build a model of the victim’s speech using deep neural networks

- Once the model is built, use it to make the victim’s voice say virtually anything. This is made possible by Generative Adversarial Networks (GANs), a class of machine learning models in which a generator network learns to produce synthetic samples while a second, discriminator network learns to tell them apart from real ones, until the synthetic samples become hard to distinguish from the real data. For more information, refer to the following resource: https://medium.com/@jonathan_hui/gan-whats-generative-adversarial-networks-and-its-application-f39ed278ef09
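To make the adversarial idea concrete, here is a toy sketch in Python. It is not a voice model and not how Lyrebird or any other product works. The “real data” are single numbers drawn from a Gaussian with a hypothetical mean of 1.0, the generator only shifts random noise by a learned offset `b` (its spread is fixed to keep the example stable), and the discriminator is a logistic-regression classifier. The names `train_gan`, `REAL_MEAN`, `NOISE_STD` and the learning rates are illustrative assumptions.

```python
import math
import random

# Toy 1-D GAN, for illustration only (hypothetical numbers throughout).
# "Real" data: numbers drawn from N(REAL_MEAN, REAL_STD^2).
# Generator:     G(z) = b + z, with z ~ N(0, NOISE_STD^2); only b is learned.
# Discriminator: D(x) = sigmoid(w * x + c), a logistic-regression classifier.
REAL_MEAN, REAL_STD = 1.0, 0.5
NOISE_STD = 0.5
BATCH, STEPS = 128, 600
LR_D, LR_G = 0.5, 0.2


def sigmoid(s: float) -> float:
    """Numerically safe logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_gan(seed: int = 0) -> float:
    """Alternate discriminator and generator updates; return the learned offset b."""
    rng = random.Random(seed)
    w, c = 0.0, 0.0  # discriminator parameters
    b = -1.0         # generator starts far away from the real data

    for _ in range(STEPS):
        real = [rng.gauss(REAL_MEAN, REAL_STD) for _ in range(BATCH)]
        fake = [b + rng.gauss(0.0, NOISE_STD) for _ in range(BATCH)]

        # Discriminator step: minimise binary cross-entropy (real -> 1, fake -> 0).
        grad_w = grad_c = 0.0
        for x in real:
            g = sigmoid(w * x + c) - 1.0  # d/ds of -log D
            grad_w += g * x
            grad_c += g
        for x in fake:
            g = sigmoid(w * x + c)        # d/ds of -log(1 - D)
            grad_w += g * x
            grad_c += g
        w -= LR_D * grad_w / BATCH
        c -= LR_D * grad_c / BATCH

        # Generator step: minimise -log D(G(z)), i.e. make the fakes look real.
        grad_b = 0.0
        for _ in range(BATCH):
            x = b + rng.gauss(0.0, NOISE_STD)
            grad_b += (sigmoid(w * x + c) - 1.0) * w  # chain rule through x = b + z
        b -= LR_G * grad_b / BATCH

    return b


if __name__ == "__main__":
    print(f"generator mean after training: {train_gan():.2f} (real mean {REAL_MEAN})")
```

Because this discriminator is linear, it can only compare where the two sets of numbers are centred, so the generator ends up matching the mean and nothing else. Systems that clone voices use deep networks so that far richer structure can be learned.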

Proof of Concept

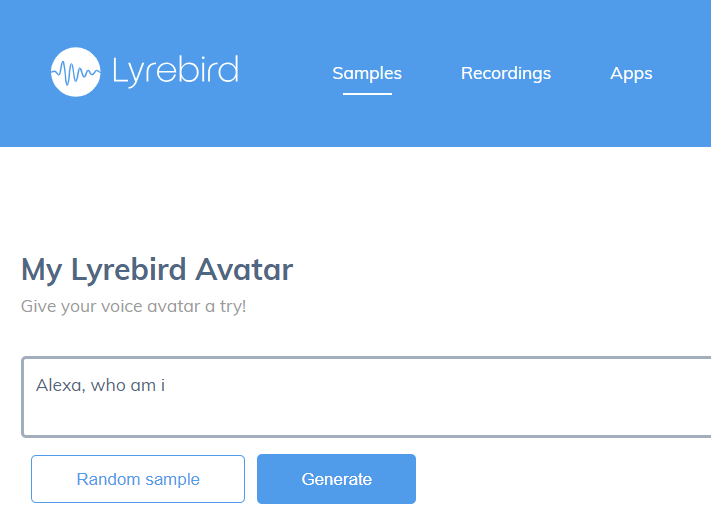

In this blog post we demonstrate how easy it is to bypass the voice recognition feature of the Amazon Echo, and test the same approach against Google Home, using the Lyrebird technology (https://lyrebird.ai), which claims to be able to mimic any voice from as little as one minute of recorded audio. According to Lyrebird’s researchers, they create the “most realistic artificial voices in the world” by using artificial neural networks that rely on deep-learning techniques. In particular, Generative Adversarial Network (GAN) algorithms take an initial training set (which could be a set of images, videos or, in this case, voice recordings) and create new output that is similar to the provided input (e.g. a digital voice that resembles the original voice).

In the following videos you can see our attempts to bypass the voice recognition feature on the Amazon Echo and Google Home devices.

To create a digital clone of a person’s voice, we followed these steps:

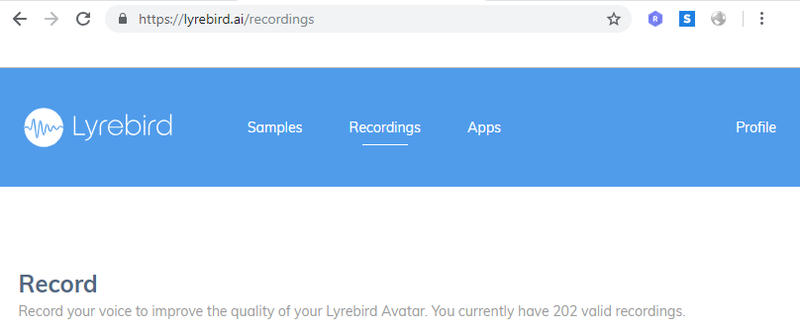

- An account was created on Lyrebird.com

- Lyrebird was trained by recording more than 200 audio samples (the system requires only 30 audio samples to generate a voice, but the more recordings it has, the more accurate the digital voice will be)

- A digital voice sample was generated using Lyrebird’s text-to-speech engine

In the following video you can see how the voice recognition feature on the Amazon Echo device was bypassed using the Lyrebird service. It was not possible to bypass Google Home devices with the same technique, but that does not mean they cannot be bypassed with a similar or alternative approach. It is also important to note that, for the purpose of this test, no dedicated or high-quality microphones were used, in order to better simulate a real-world attack scenario.

It is worth noting that neither Google nor Amazon treats this as a security issue, because these features are not advertised or implemented as security features. In other words, they do not provide any form of voice authentication; they are only a means of receiving different instructions or requests and responding appropriately depending on who is speaking. However, Google’s Voice Match does allow users to create separate user profiles with custom information, including payment methods.
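This post does not describe how the devices decide which profile is speaking, so the sketch below should not be read as Amazon’s or Google’s implementation. A common approach in speaker recognition, assumed here, is to turn each utterance into a fixed-length embedding vector and compare it with the enrolled profiles using cosine similarity: the closest profile wins if its score clears a threshold. The profile vectors, the 0.85 threshold and the `identify` helper are all hypothetical.

```python
import math
from typing import Dict, List, Optional

# Hypothetical enrolled voice profiles. In a real system each vector would
# typically be a speaker embedding computed by a neural network from
# enrollment recordings; these numbers are made up for illustration.
PROFILES: Dict[str, List[float]] = {
    "alice": [0.9, 0.1, 0.3, 0.2],
    "bob": [0.1, 0.8, 0.2, 0.5],
}
THRESHOLD = 0.85  # hypothetical minimum similarity to accept a match


def cosine(u: List[float], v: List[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def identify(utterance: List[float]) -> Optional[str]:
    """Return the closest enrolled profile, or None if nothing clears the threshold."""
    best_name, best_score = None, -1.0
    for name, profile in PROFILES.items():
        score = cosine(utterance, profile)
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= THRESHOLD else None


if __name__ == "__main__":
    cloned = [0.8, 0.2, 0.35, 0.25]   # hypothetical embedding of a synthetic voice
    stranger = [0.2, 0.2, 0.9, 0.1]   # hypothetical embedding of an unrelated voice
    print(identify(cloned), identify(stranger))
```

With these made-up numbers, the synthetic utterance lands close enough to Alice’s profile to be routed to her, while the unrelated voice falls below the threshold and is treated as unrecognised. A system built this way only asks “which enrolled voice is this closest to?”, which is why a good enough clone can be accepted as the victim.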

We think it is important to draw more attention to this potential issue in order to better protect users from the new and emerging threats affecting A.I.-powered biometric authentication platforms. This could be of particular concern for financial businesses that rely on A.I.-based biometric authentication schemes.

References

HSBC. (n.d.). Voice ID. Retrieved from HSBC: https://ciiom.hsbc.com/ways-to-bank/phone-banking/voice-id/

Simmons, D. (2017, May 19). BBC fools HSBC voice recognition security system. Retrieved from BBC: https://www.bbc.co.uk/news/technology-39965545

Trilok, C. T. (2004, May 7). Establishing the Uniqueness of the Human Voice for Security. CSIS Student/Faculty Research Day, (p. 6). Retrieved from http://www.csis.pace.edu/~ctappert/srd2004/paper08.pdf